If you’ve ever chatted with ChatGPT and found yourself thinking, “What does the ‘T’ stand for?” you’re not alone. No, it’s not “Talkative” (although I do love a good conversation), nor is it “Tacos” (unfortunately).

Ever wondered how Siri understands your commands or how Google Translate makes sense of gibberish in one language and spits out perfect sentences in another?

<< Okay fine! It’s time to reveal the elephant in the room >> TUDUMMMMM….🎊

The “T” in ChatGPT stands for “Transformer” 🤖, as in GPT (Generative Pre-trained Transformer). Yuppp! Transformers are the real superheroes behind the scenes of modern AI, the secret sauce behind my witty responses, detailed explanations, and occasional attempts at humor (like this one 😉).

Let’s break it down, step by step, in a fun and easy way. By the end, you’ll not only know what Transformers are but also feel ready to casually drop “self-attention” into conversations at parties (or at least impress your dog).

So what exactly is a Transformer? 🤖

A transformer isn’t a robot in disguise (sorry, Optimus Prime fans). In the simplest terms, it is a type of deep learning model designed to process and understand text. It’s like the super-smart kid in class who can read a book and instantly know what it’s about: summarizing it, translating it, or even answering questions about it.

Think of Transformers as translators who don’t just translate word by word but understand the whole sentence, its meaning, and even its tone. For example:

Input: “It’s raining cats and dogs outside!”

Output: (Transformer brain says): “Oh, they mean it’s raining heavily, not actual animals falling from the sky.”

They can also generate text, summarize documents, and even write poetry (though they’re not great with rhymes).

Transformers were introduced in the paper “Attention Is All You Need” by Vaswani et al. in 2017. They’ve revolutionized Natural Language Processing (NLP) and power models like GPT and BERT, as well as products like ChatGPT.

But what makes Transformers so special? Let’s break it down.

The Secret Sauce: Self-Attention ♨️

The transformer’s secret superpower is attention, specifically self-attention. We can say “self-attention” is the heart of transformers. Here’s where the magic 🪄happens. Let’s use an example to understand:

Suppose you’re reading this sentence:

“The cat, which was sitting on the mat, saw the dog.”

When you read “cat,” you instinctively link it to “sitting on the mat” and “saw the dog.” Your brain doesn’t just read words in order — it jumps around, picking out context. That’s exactly what self-attention does. It allows the model to focus on the right words, no matter their position.

Without self-attention, an AI might treat this sentence like this:

“The. Cat. Which. Was. Sitting. On. The. Mat. Saw. The. Dog.”

With self-attention, the model can think:

“Okay, ‘cat’ is the one doing the seeing, ‘sitting on the mat’ describes the cat, and ‘dog’ is what got seen. Let’s connect the dots.”
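For the curious, here is a minimal sketch of that "connecting the dots" step in plain Python. It is a simplification, not how a production model is written: the word vectors are made-up numbers, and each vector acts as its own query, key, and value, whereas a real Transformer first passes the vectors through three learned projections. The names `softmax` and `self_attention` are just labels chosen for this example.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with no learned weights.

    Simplification: each word vector serves as its own query, key, and value.
    """
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Score this word against every word in the sentence (itself included).
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # The new vector is a weighted blend of all the word vectors.
        blended = [sum(w * vec[i] for w, vec in zip(weights, vectors))
                   for i in range(dim)]
        outputs.append(blended)
        all_weights.append(weights)
    return outputs, all_weights

# Made-up 2-number "embeddings", purely for illustration.
words = ["cat", "mat", "dog"]
vectors = [[1.0, 0.2], [0.1, 1.0], [0.9, 0.3]]

outputs, weights = self_attention(vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 2) for w in row])
```

Each printed row shows how much one word "looks at" every other word. Because the invented vectors for "cat" and "dog" point in similar directions, "cat" gives more weight to "dog" than to "mat". In a trained model, those vectors are learned from data rather than typed in by hand.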

Why Transformers? What’s the Big Deal? 🤷‍♂️

Before transformers, we had older models like RNNs (Recurrent Neural Networks) and LSTMs (Long Short-Term Memory Networks). These models were:

- Slow (like a snail crossing a freeway).

- Prone to forgetting earlier parts of long sentences.

- Limited in their ability to process data in parallel.

Then came transformers, with their flashy, efficient, parallel-processing abilities. They turned the AI world upside down, much like how smartphones replaced flip phones.

Transformers? They handle all this with ease:

- They read all parts of a sentence simultaneously (parallel processing!).

- They “remember” connections over long text spans.

- They scale beautifully with large datasets.
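The difference between "one word at a time" and "all words at once" can be sketched with a toy example. Neither function below is a real RNN or a real Transformer: the update rule and the averaging step are stand-ins invented for this post. The only thing they are meant to show is the shape of the dependency: the first loop cannot compute step 3 before step 2, while the second can compute any position in any order.

```python
def rnn_style(inputs):
    """Each step needs the previous step's result, so the work is strictly sequential."""
    state = 0.0
    states = []
    for x in inputs:
        state = 0.5 * state + x  # toy update rule, not a real RNN cell
        states.append(state)
    return states

def attention_style_position(i, inputs):
    """Output for position i, computed from the raw inputs only."""
    context = sum(inputs) / len(inputs)  # toy stand-in for an attention-weighted mix
    return inputs[i] + context

inputs = [1.0, 2.0, 3.0, 4.0]
sequential = rnn_style(inputs)

# Positions can be computed in any order (or all at once on parallel hardware).
order = [3, 0, 2, 1]
results = {i: attention_style_position(i, inputs) for i in order}
parallel_friendly = [results[i] for i in range(len(inputs))]

print(sequential)
print(parallel_friendly)
```

Since no position waits on another in the second version, hardware like GPUs can work on every position at the same time, which is a big part of why Transformers train faster on long text.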

The Transformer Recipe 🧑‍🍳

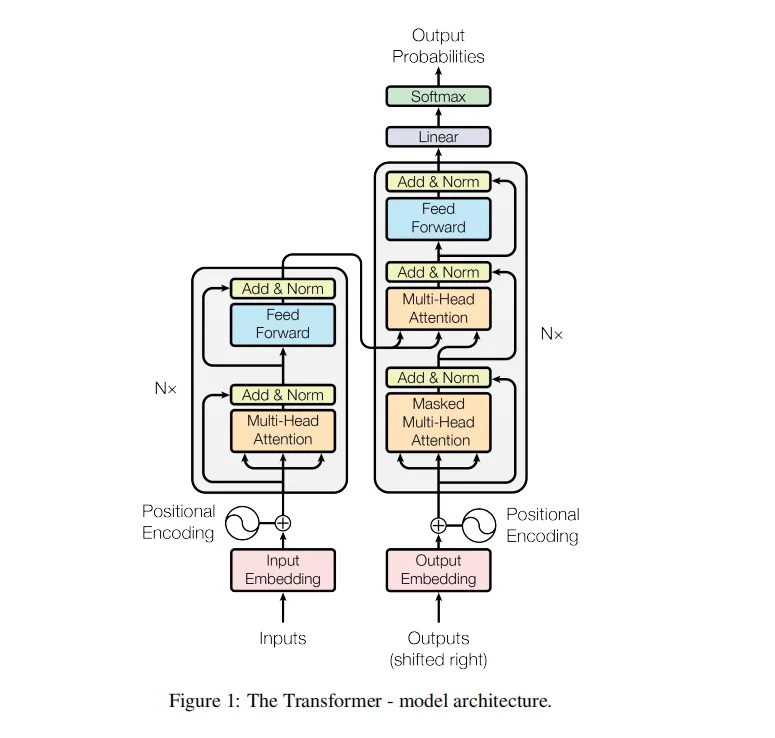

A transformer has two main parts:

- Encoder: Think of this as your brain when you listen to someone. It processes the input (text) and understands its meaning. (It’s like Sherlock Holmes carefully examining every clue. 🕵️)

- Decoder: Now, this is like your brain when you respond. It takes the processed input and generates something useful, like a translation or a summary. (This is Dr. Watson narrating the solution to everyone.)

Together, they’re like the dynamic duo 🫂 of text processing.

In tasks like translation, the encoder understands the source language (say, English), and the decoder produces the target language (like French).
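Here is a rough sketch of how the two halves hand work to each other. Everything in it is hypothetical: `TOY_DICTIONARY`, `encode`, `decode_next`, and `translate` are made-up names, and the word-by-word lookup is exactly the kind of shortcut a real Transformer does not take. What the sketch does try to capture is the flow: the encoder reads the whole input once, and the decoder then produces the output one token at a time, looking at what it has written so far.

```python
# Hypothetical toy vocabulary; a real model learns its knowledge from data.
TOY_DICTIONARY = {"she": "elle", "loves": "aime", "chocolate": "le chocolat"}

def encode(source_text):
    """Stand-in encoder: turn the input into a 'memory' the decoder can consult."""
    return source_text.lower().split()

def decode_next(memory, generated):
    """Stand-in decoder step: choose the next token from the memory and the output so far."""
    position = len(generated)
    if position >= len(memory):
        return "<end>"
    return TOY_DICTIONARY.get(memory[position], memory[position])

def translate(source_text, max_steps=20):
    memory = encode(source_text)   # the encoder runs once over the whole input
    generated = []
    for _ in range(max_steps):     # the decoder runs one token at a time
        token = decode_next(memory, generated)
        if token == "<end>":
            break
        generated.append(token)
    return " ".join(generated)

print(translate("She loves chocolate"))
```

In a real model, both stand-in functions are replaced by stacks of attention layers, and the decoder picks each next token by scoring every word in its vocabulary rather than by looking anything up.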

Breaking Down the Transformer Architecture 🛠️

a. Encoder-Decoder Structure

<< We just discussed this above, i.e., the Encoder part and the Decoder part >>

b. Self-Attention

Here’s where the magic 🪄 happens. Self-attention helps Transformers understand which words in a sentence are related. Let’s say you have the sentence:

“The elephant saw the mouse, and it ran away.”

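Who ran away? The mouse or the elephant? Grammar alone can’t settle it. Self-attention lets the model weigh “it” against every other word in the sentence, so it can use clues like “ran away” to judge which animal “it” most likely refers to.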

c. Positional Encoding

Words in a sentence have an order, and Transformers need to know that. Positional encoding acts as the GPS, letting the model know the sequence:

“She loves chocolate” isn’t the same as “Chocolate loves she.”
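One way to build that GPS is the sine-and-cosine scheme described in “Attention Is All You Need”: every position gets its own pattern of numbers, and that pattern is added to the word's vector. The sketch below follows that formula, but the word vectors are invented for illustration, and the helper names `positional_encoding` and `add_positions` are my own.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal encoding: even slots use sin, odd slots use cos,
    with wavelengths that grow as the slot index grows."""
    encoding = []
    for i in range(dim):
        pair = i // 2  # slots 2k and 2k+1 share one frequency
        angle = position / (10000 ** (2 * pair / dim))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_positions(word_vectors):
    """Add each position's pattern to the word vector sitting at that position."""
    dim = len(word_vectors[0])
    return [[v + p for v, p in zip(vec, positional_encoding(pos, dim))]
            for pos, vec in enumerate(word_vectors)]

# Made-up 4-number embeddings, purely for illustration.
she = [0.1, 0.2, 0.3, 0.4]
loves = [0.5, 0.1, 0.0, 0.2]
chocolate = [0.9, 0.8, 0.1, 0.3]

original = add_positions([she, loves, chocolate])
scrambled = add_positions([chocolate, loves, she])

print(original[0])   # "she" at position 0
print(scrambled[2])  # "she" at position 2: same word, different numbers
```

The same word ends up with different numbers depending on where it sits, which is how the model can tell the two sentences apart. Many newer models use learned or relative position schemes instead, so treat this as one option rather than the only recipe.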

Real-Life Examples

Transformers are everywhere, making our lives better in subtle and not-so-subtle ways:

a. ChatGPT

Ask ChatGPT about the weather, and it’ll respond intelligently. It’s powered by Transformers, making it great at understanding context.

You: “Tell me a joke.”

ChatGPT: “Why did the math book look sad? Because it had too many problems!” 😂

b. Google Translate

Type “Hola” into Google Translate, and it says “Hello.” Thanks to Transformers, it’s not just swapping words but interpreting the entire meaning.

c. Summarization Tools

Ever wished you could condense a 20-page report into a single paragraph? Transformers do that without breaking a sweat.

A Glimpse into the Future of Transformers ⏳

With transformers evolving rapidly, the future is brimming with possibilities. Imagine:

- Real-time language translation devices.

- AI-generated movies and books.

- Smarter personal assistants who know you better than your best friend.

Transformers are just getting started. The possibilities are endless, and transformers are driving us toward this exciting horizon.

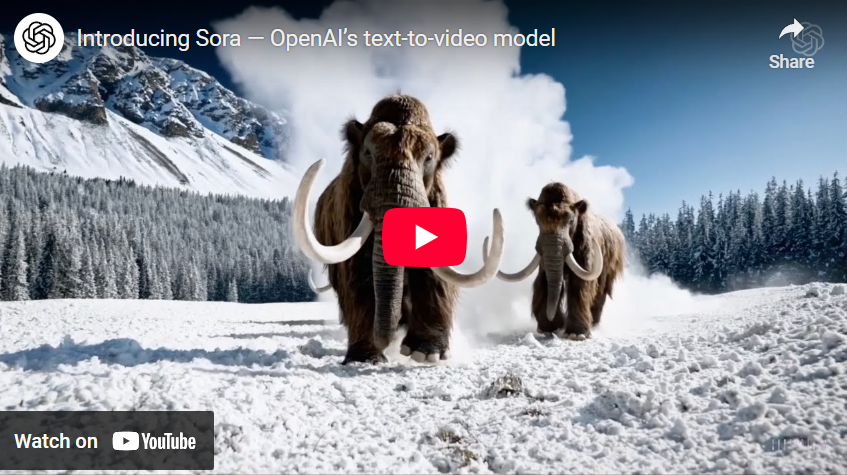

Recently, OpenAI launched Sora, a text-to-video generative AI model. That means you write a text prompt, and it creates a video that matches the description in the prompt. Here’s an example from the OpenAI site:

Conclusion: Transformers — Changing the Game ✨

Transformers have changed the AI game. They’re fast, accurate, and adaptable. Transformers are the unsung heroes of modern AI. They power tools we use daily, from chatbots to translation apps, and make tasks like summarizing text or generating creative content feel magical. And while they might not save the world like Marvel superheroes, they’re making our lives easier, one prediction at a time.

And now you know what the “T” in ChatGPT stands for. Cool, right?

Now, the next time someone brings up AI, you can casually say, “Oh, you mean Transformers? Love how self-attention works.” Trust me, you’ll sound brilliant.

Ohhh..Wait wait wait…Wait a minute! I have something to share with you before you leave. This blog is just a simple layman’s description of Transformers. A more detailed blog on the Transformers is COMING SOOOON. Stay Tuned!

*Spoiler for you: The title of my next blog will be: “Attention Is All You Need Paper Implementation”* 📝

Okay! Ciao Ciao!! 👋 Thank you for spending your precious time here. I hope you enjoyed reading about Transformers! Questions or comments? Drop them below, because learning is always better when it’s a two-way street. 🚀

Keep exploring, Keep learning, and Keep embracing the possibilities of AI.

If you liked this article ❤, feel free to drop your views at any of my socials.
