Software is changing (again)!

A few days ago, I was watching Andrej Karpathy’s talk at Y Combinator’s AI Startup School, and something he said really stuck with me:

“The hottest new programming language is English.”

It sounds wild at first, right? But if you’ve been keeping up with how large language models (LLMs) are changing things, it starts to make a lot of sense. We’re not just adding AI to our apps anymore. We’re rethinking the very foundation of how software gets built.

And no, this isn’t just another tech trend. It’s a real shift - the kind that only comes around once in a generation.

"Welcome to the era of Software 3.0" 🔥

~ TL;DR 📄

Running short on time? No worries - here’s a quick summary of the blog to get you caught up! 😉

- Software is undergoing a paradigm shift.

- In the era of Software 3.0, English is the new programming language, powered by Large Language Models (LLMs).

- We’re moving from hard-coded logic (Software 1.0) and data-trained models (Software 2.0) to natural language interfaces that simulate human cognition.

- LLMs aren’t perfect - they’re “people spirits” with brilliance and flaws, so the future lies in partial autonomy, human-AI collaboration, and building dual interfaces for both users and AI agents.

- Those who master all three software paradigms and rethink their infrastructure will lead the next wave of innovation.

Now that you’re all caught up, here’s where the deep dive begins! 👇

~ A Software Paradigm Shift: What’s Actually Changing?

The way we interact with computers is evolving again. But this time, it’s not about flashy UI updates or faster processors. It’s about how we tell machines what to do.

Most people still see LLMs as fancy chatbots. That’s a mistake. While many are debating whether to bolt ChatGPT onto their app, others are quietly using these models to rebuild entire systems - faster, easier, and with fewer engineers.

The reason? Software is entering a new paradigm. The kind of shift that happens maybe twice in seventy years.

~ The Evolution of Software: From 1.0 to 3.0

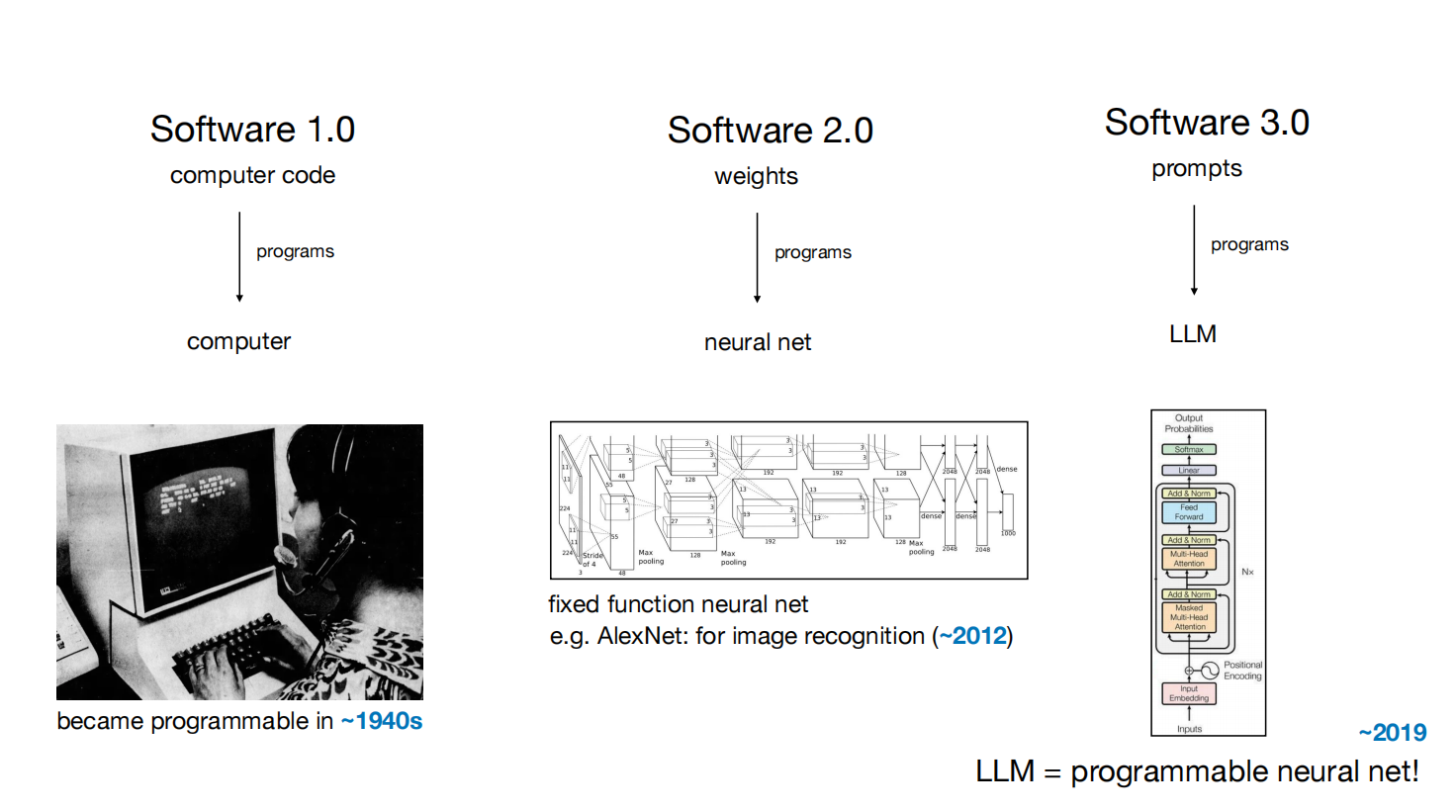

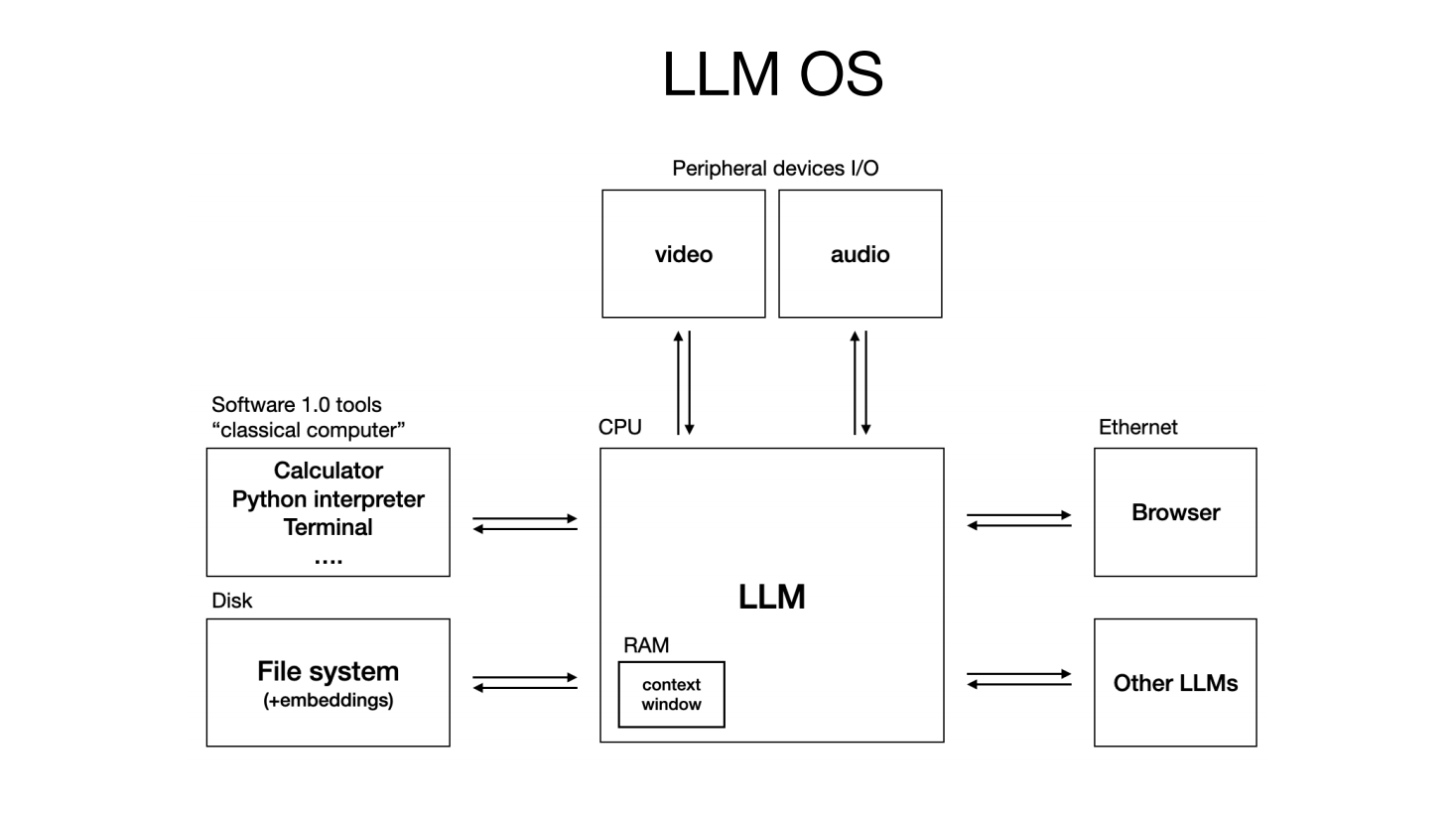

To understand Software 3.0, it’s essential to trace the evolution of software paradigms.

- 🧱 Software 1.0 ~ [Traditional Coding]: This is the world that most developers grew up in. You write specific instructions in languages like C++, JavaScript, or Python. Every function, every loop - it’s all manually crafted. This approach gave us everything from the web to video games. It’s powerful, but labor-intensive.

- 🧠 Software 2.0 ~ [Learning from Data]: Then came neural networks. Instead of writing out all the logic, you feed a system a bunch of data and let it figure things out. The “code” becomes the trained model - a bunch of mathematical weights. This made things like facial recognition and voice assistants possible. But it required serious expertise in machine learning.

- 🗣️ Software 3.0 ~ [Prompting in Natural Language]: Now we’ve reached a new level. The language of programming is English. The "code" is a prompt. The LLM interprets your intent and executes on it.

A perfect example is sentiment analysis: you can write hundreds of lines of Python code, train a neural network on labeled data, or simply prompt an LLM: "Analyze the sentiment of this text." Same task, radically different approach.
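To make the contrast concrete, here is a minimal sketch of the 1.0 and 3.0 versions side by side (the 2.0 version would need a labeled dataset, so it is left out). The word lists are deliberately tiny, and `llm` is a hypothetical stand-in for whatever model client you use: any function that takes a prompt string and returns the reply text.

```python
from typing import Callable

# Software 1.0: hand-written rules (tiny, illustrative word lists).
POSITIVE = {"great", "love", "excellent", "good"}
NEGATIVE = {"bad", "terrible", "hate", "awful"}


def sentiment_rules(text: str) -> str:
    """Count positive and negative words and compare the totals."""
    words = [w.strip(".,!?").lower() for w in text.split()]
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


# Software 3.0: the "program" is an English prompt.
PROMPT_TEMPLATE = (
    "Analyze the sentiment of this text. "
    "Answer with one word: positive, negative, or neutral.\n\n"
    "Text: {text}"
)


def sentiment_prompt(text: str, llm: Callable[[str], str]) -> str:
    """`llm` is a hypothetical callable: prompt in, reply text out."""
    reply = llm(PROMPT_TEMPLATE.format(text=text)).strip().lower()
    # Model output is free-form text, so validate it before trusting it.
    return reply if reply in {"positive", "negative", "neutral"} else "unknown"
```

Notice that even the prompt version keeps a line of old-fashioned validation around the model's reply. The paradigms stack rather than replace each other.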

This shift is already underway, with companies rewriting software stacks to leverage LLMs, impacting how software is developed and consumed.

~ I Tried It Myself...

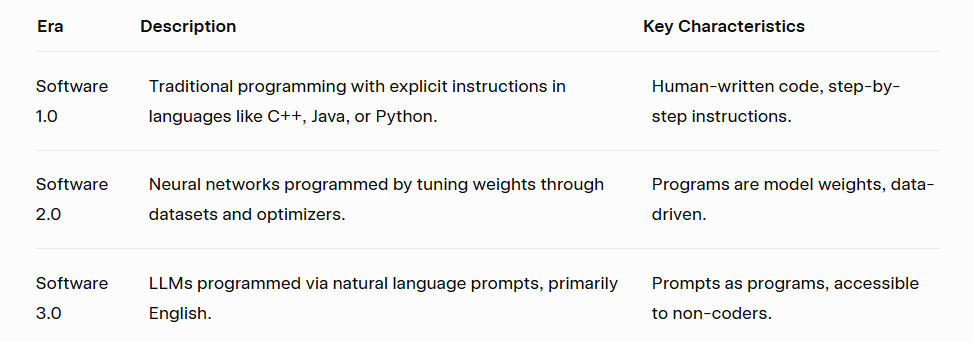

I decided to test this "hype" myself. Without knowing the inner workings of an AI text summarizer, I asked Cursor AI to help me build a simple summarization tool. In two days, I had something usable. And with some tweaks and playing around, I made a full-fledged app with absolutely "No Manual Coding."

You can try out the demo here: Click!

I’m a Computer Science Engineer, so I’m still comfortable writing code. But even I was surprised by how much I could do with just clear instructions in English. It made me realize - if you can describe what you want well, you can build it.

The old gatekeepers - syntax, debugging, frameworks - aren’t as important anymore. What matters now is "Clarity of Thought."

~ LLM Psychology: Understanding "People Spirits"

LLMs are often misunderstood as mere chatbots or databases, but they are far more complex. Karpathy describes them as "people spirits" - stochastic simulations of human behavior trained on vast digital text. They exhibit human-like psychology with both superhuman capabilities and human limitations:

- Strengths: LLMs can recall obscure facts, perform complex reasoning, and generate creative solutions. They excel at tasks like solving intricate math problems or generating code for sophisticated applications.

- Limitations: They can hallucinate, make logical errors (e.g., insisting 9.11 > 9.9), and suffer from "digital amnesia," unable to retain knowledge across sessions without external memory aids like ChatGPT's Memory feature.

This jagged intelligence profile necessitates a new approach to product development. Companies must design systems with human oversight to mitigate errors. For instance, while an LLM can generate code rapidly, a human must review it, especially in critical applications.
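Some of that oversight can be plain Software 1.0 code that runs before a human ever looks. As a small sketch, here is a deterministic check for the "9.11 vs 9.9" kind of slip. The function name and the idea of passing the model's answer in as a string are assumptions for illustration, not part of any particular tool.

```python
from decimal import Decimal


def verify_comparison(a: str, b: str, claimed_larger: str) -> bool:
    """Return True if the claimed larger number really is the larger of a and b."""
    # Decimal compares by numeric value, so "9.9" correctly beats "9.11".
    actual_larger = max(a, b, key=Decimal)
    return actual_larger == claimed_larger


# A model that insists 9.11 > 9.9 gets caught by the check.
print(verify_comparison("9.11", "9.9", claimed_larger="9.11"))
print(verify_comparison("9.11", "9.9", claimed_larger="9.9"))
```

Cheap checks like this don't replace human review, but they shrink the pile of output a human has to look at.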

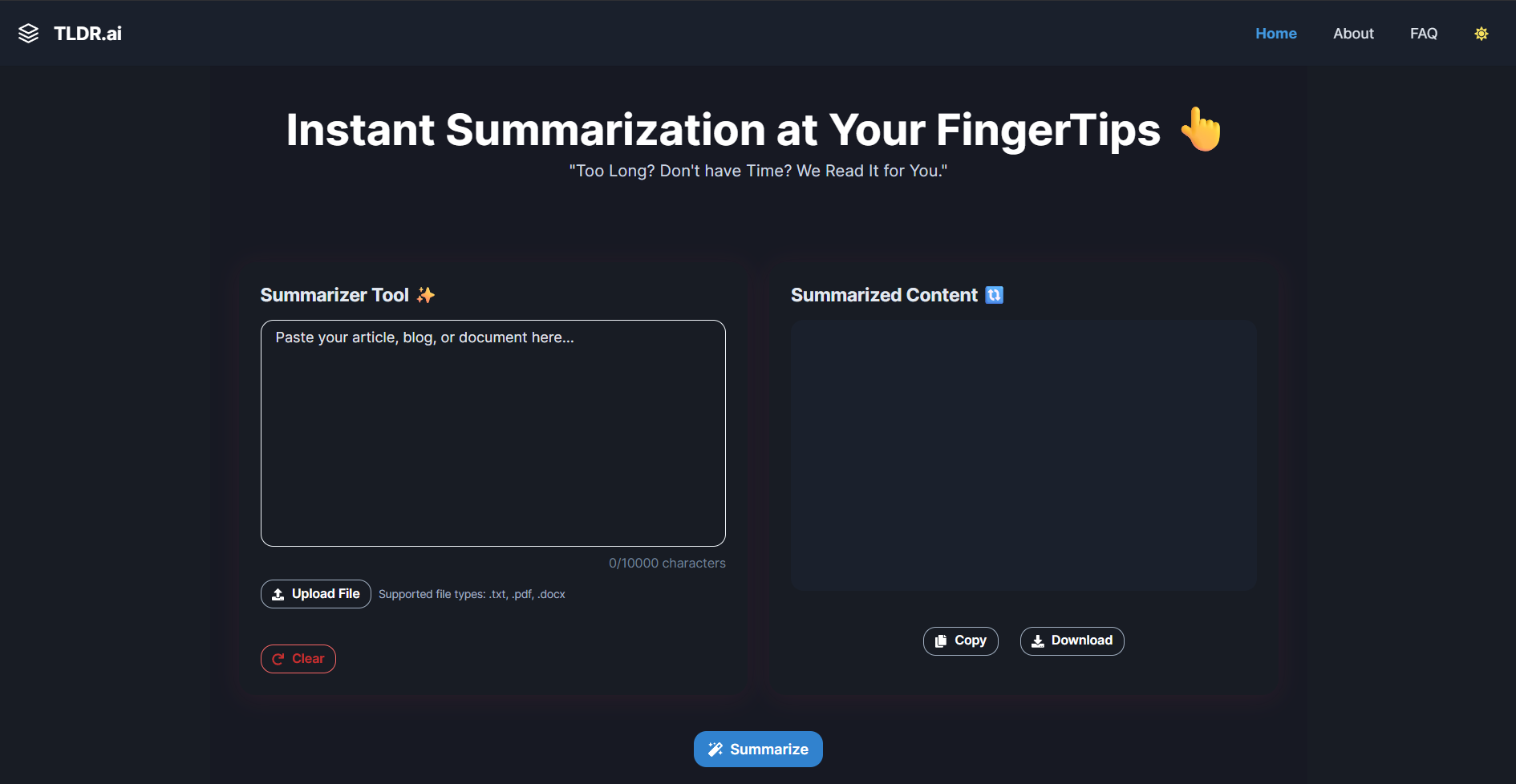

~ LLMs as the New Operating System (OS)

- Think of the "LLM" as the "CPU", the central processor of Software 3.0.

- The "context window" is your "RAM", your working memory.

- "Prompting" is your "interface".

The cloud is your shared compute environment - just like mainframes in the ’60s.
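The "context window as RAM" part of the analogy is easy to picture in code. Below is a toy sketch, assuming one word equals one token (real tokenizers differ) and a simple oldest-first eviction policy. `ContextWindow` is a made-up class for illustration, not an API from any LLM library.

```python
from collections import deque


class ContextWindow:
    """Toy working memory: a fixed token budget, oldest messages evicted first."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages: deque[str] = deque()

    @staticmethod
    def count_tokens(message: str) -> int:
        # Assumption: one whitespace-separated word counts as one token.
        return len(message.split())

    def used(self) -> int:
        return sum(self.count_tokens(m) for m in self.messages)

    def add(self, message: str) -> None:
        self.messages.append(message)
        # Evict from the front until we fit the budget, always keeping the newest message.
        while self.used() > self.max_tokens and len(self.messages) > 1:
            self.messages.popleft()


window = ContextWindow(max_tokens=5)
window.add("a b c")
window.add("d e")
window.add("f g")  # pushes the total over budget, so "a b c" is dropped
print(list(window.messages))
```

Whatever falls out of the window is simply gone for the model, which is why anything that must persist has to be stored outside it and fed back in.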

We’re in a pre-personal AI era. Most LLMs are still too costly to run locally, so we interact with them via API. But history tells us this won’t last. Just as personal computers replaced time-sharing mainframes, we’ll see LLMs move closer to users.

And the ecosystem? It’s shaping up fast. We’ve got closed systems like OpenAI and Anthropic, and open alternatives like LLaMA. It’s Linux vs. Windows all over again - but this time, for intelligence.

Recent outages from major LLM providers have shown us just how reliant businesses are becoming on this infrastructure. An “intelligence brownout” is no longer theoretical.

~ Partial Autonomy: The Only Viable Strategy

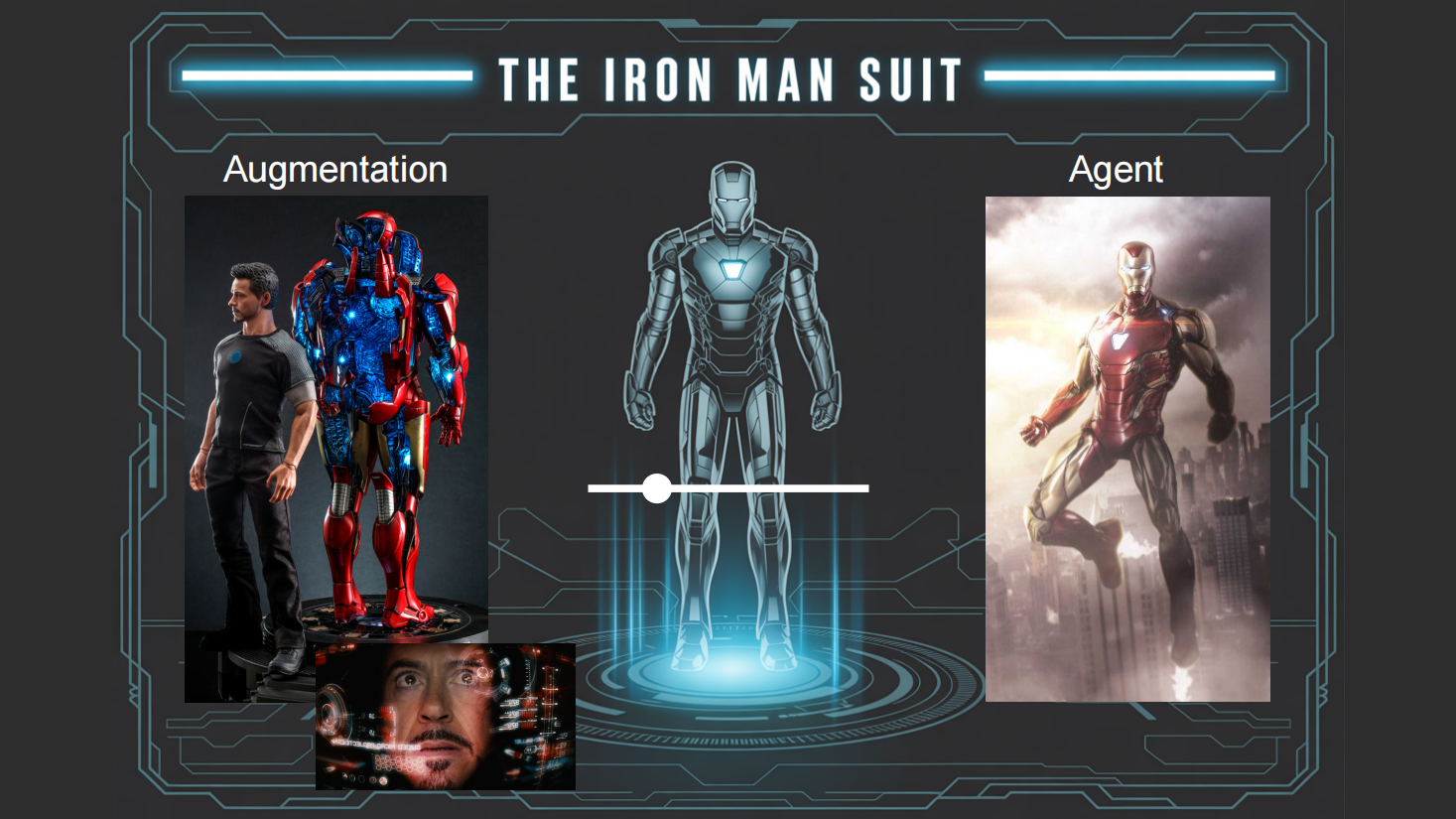

While the idea of fully autonomous systems is appealing, complete autonomy in complex, real-world scenarios remains out of reach. Just look at self-driving cars: after a decade of promises and billions in funding, the industry has yet to achieve Level 5 autonomy.

Let’s be honest: that vision is far from ready. Why? Because real-world automation is incredibly complex.

Karpathy’s advice? Focus on partial autonomy. Build systems with adjustable levels of delegation.

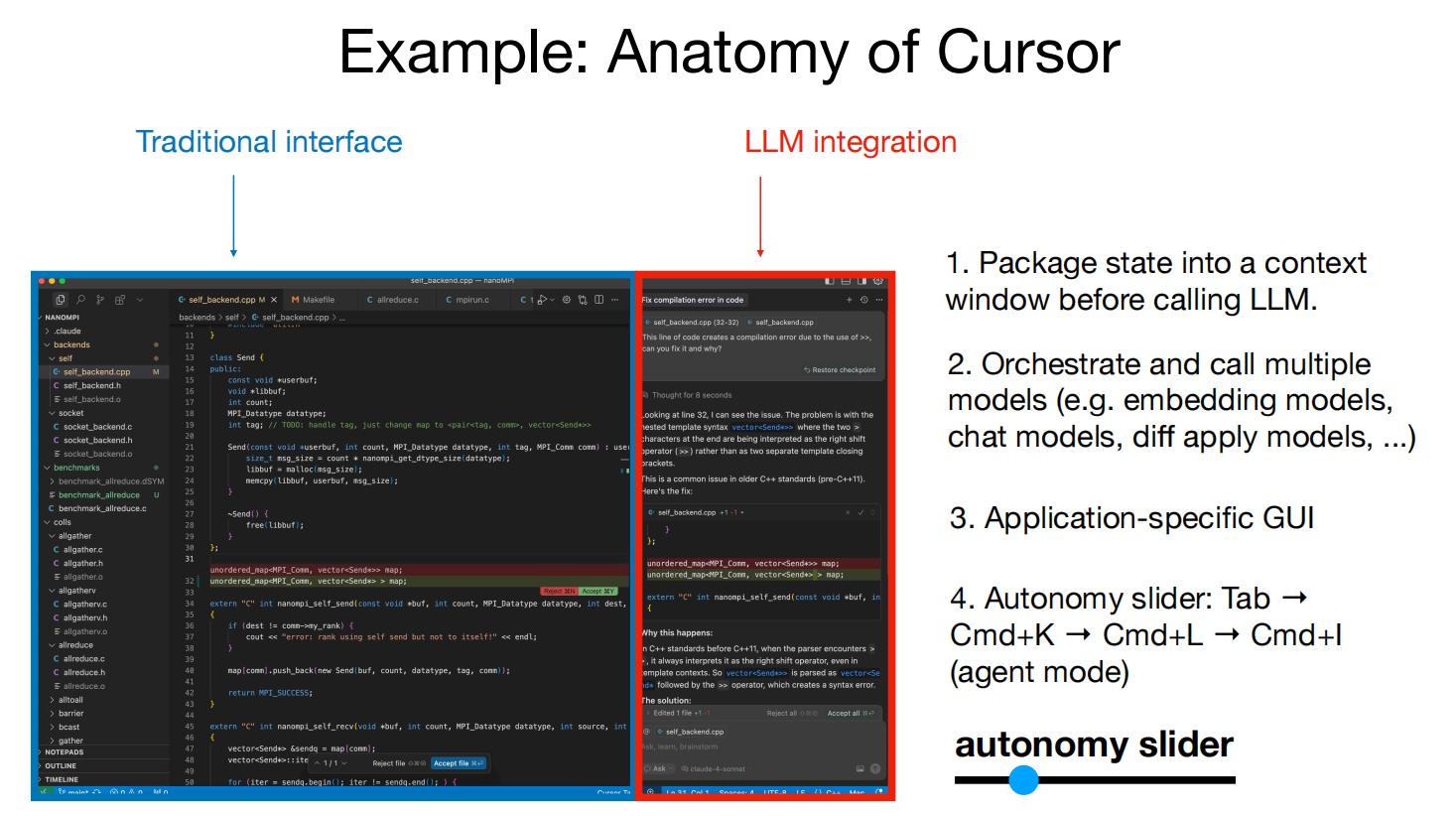

Cursor is a perfect case study:

- Want AI to autocomplete some code? Sure.

- Want it to rewrite a block with your guidance? Go ahead.

- Trust it to redo a whole file? That’s your choice.

Users control the risk-reward balance. Because at the end of the day, it’s still a human’s responsibility to verify the output. You can’t skip the audit step.
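Here is one way an "autonomy slider" could look in code. This is a minimal sketch built on the three levels described above. The names (`Autonomy`, `ProposedChange`, `route_change`) are invented for illustration and are not how Cursor actually implements it.

```python
from dataclasses import dataclass
from enum import IntEnum


class Autonomy(IntEnum):
    AUTOCOMPLETE = 1  # suggest a few tokens or a line
    EDIT_BLOCK = 2    # rewrite a selected block
    EDIT_FILE = 3     # rewrite a whole file


@dataclass
class ProposedChange:
    scope: Autonomy
    description: str


def route_change(change: ProposedChange, slider: Autonomy) -> str:
    """Apply changes within the delegated scope; escalate anything larger."""
    if change.scope <= slider:
        # Within what the user delegated: apply it, but still surface it for audit.
        return "apply-then-review"
    # Bigger than the user signed up for: ask before touching anything.
    return "needs-approval"


slider = Autonomy.EDIT_BLOCK
print(route_change(ProposedChange(Autonomy.AUTOCOMPLETE, "finish this line"), slider))
print(route_change(ProposedChange(Autonomy.EDIT_FILE, "rewrite utils.py"), slider))
```

Both outcomes end in a human looking at the change. The slider only decides whether that happens before or after the edit lands.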

~ Rebuilding Infrastructure for AI Agents

While the world debates AGI, something just as transformative is happening under the radar. As LLMs and AI agents become more prevalent, the infrastructure supporting digital services must evolve to accommodate this new class of users.

Think about it like this: we now have three distinct categories of digital information consumers.

👤 Humans

- Interface: Graphical User Interfaces (GUIs)

- Needs: Intuitive, visual interactions

🤖 Programs

- Interface: Application Programming Interfaces (APIs)

- Needs: Structured, machine-readable access

🧠 AI Agents

- Interface: Hybrid of GUIs and APIs

- Needs: Programmatic access with human-like adaptability

This necessitates dual interfaces:

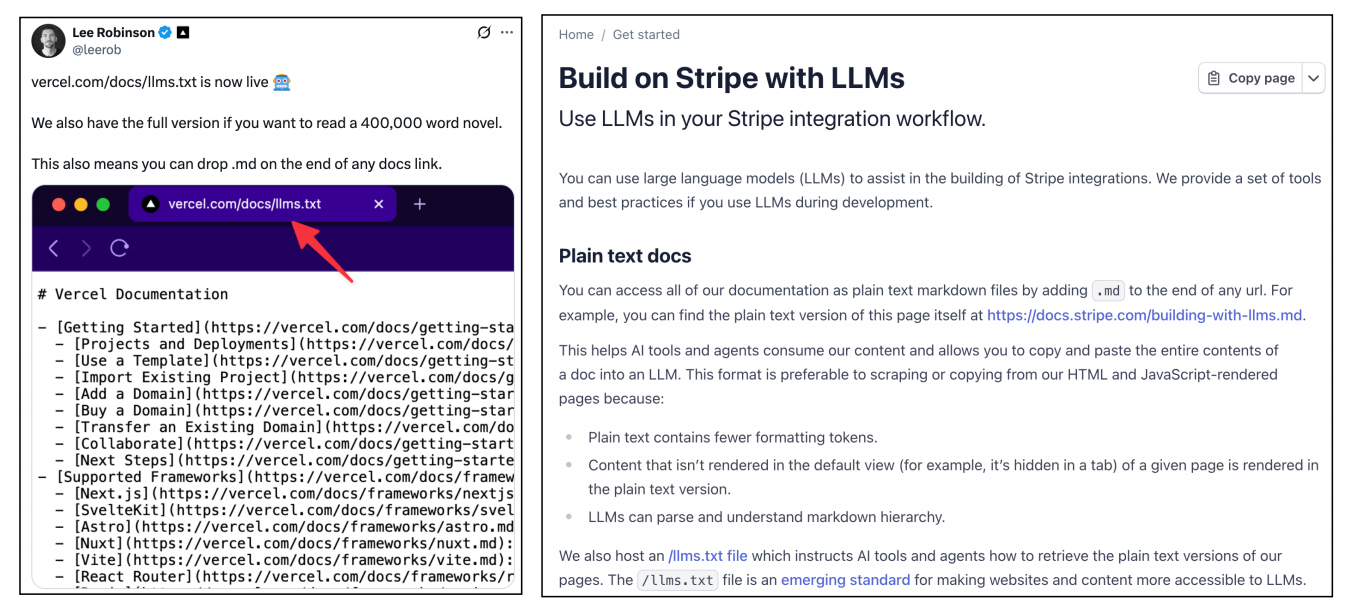

- LLM-Friendly Documentation: Companies like Stripe and Vercel offer their documentation in Markdown, which LLMs can read far more easily than rendered web pages.

- API Accessibility: Replacing "click this button" instructions with API calls that agents can execute.

- llms.txt Files: Simple, readable service descriptions written for LLMs. Tools like Gitingest follow the same idea by turning a Git repository into a single text digest that an LLM can read.

These adaptations let AI agents interact with digital services directly, which opens the door to new AI-driven applications. This isn’t about replacing human interfaces. It’s about building dual interfaces: one for humans, another for machines. Companies that embrace this duality will win the AI-native future.
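To show how small the machine-facing side can be, here is a sketch that renders a plain Markdown service description of the kind meant to be served to LLMs. The layout (a title, a one-line summary, then a list of doc links) is loosely modeled on the llms.txt idea and should be treated as an assumption rather than a spec. The service name and URLs are made up.

```python
def render_llms_txt(name: str, summary: str, docs: dict[str, str]) -> str:
    """Render a small Markdown service description: title, summary, and doc links."""
    lines = [f"# {name}", "", f"> {summary}", "", "## Docs", ""]
    lines += [f"- [{title}]({url})" for title, url in docs.items()]
    return "\n".join(lines) + "\n"


print(render_llms_txt(
    "Example Store API",  # hypothetical service
    "REST API for listing products and creating orders.",
    {
        "Quickstart": "https://example.com/docs/quickstart.md",
        "Orders API": "https://example.com/docs/orders.md",
    },
))
```

The human-facing site stays exactly as it is. This file just sits next to it and gives an agent the short version, with links to Markdown pages it can actually parse.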

~ Navigating Software 3.0: A Survival Guide

To navigate this transformative era, individuals and organizations must adopt several strategies:

- Master All Three Paradigms: Don't pick sides. Software 1.0, 2.0, and 3.0 aren't replacing each other – they're layering on top of each other. You'll need traditional coding for performance-critical systems, neural networks for pattern recognition, and LLM prompting for flexible automation. The professionals who understand when to use each approach will dominate this transition.

- Build Iron Man Suits, Not Iron Man Robots: Stop chasing fully autonomous agents. They don't work reliably, and they won't for years. Instead, build augmentation tools with autonomy sliders. Let users control how much they delegate to AI. Focus on making humans more capable, not on replacing them entirely.

- Optimize for Speed of Verification: The bottleneck isn't AI generation – it's human verification. Design interfaces that make it trivial to audit AI output. Visual diffs, clear approval workflows, granular control. The faster humans can verify AI work, the more valuable your product becomes.

- Dual-Interface Everything: Every product needs two interfaces now: one for humans, one for agents. Add LLM-friendly documentation. Replace "click here" with API calls. Make your systems programmatically accessible through natural language. The companies that do this first will capture the AI-native market before their competitors even understand what's happening.
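On the "speed of verification" point, the simplest building block is a diff plus an explicit approval gate. Here is a minimal sketch using Python's standard `difflib`. The function names and the `approved` flag are placeholders for whatever review UI you actually build.

```python
import difflib


def review_diff(original: str, proposed: str, filename: str = "file.py") -> str:
    """Return a unified diff so a reviewer sees only what the AI changed."""
    diff = difflib.unified_diff(
        original.splitlines(keepends=True),
        proposed.splitlines(keepends=True),
        fromfile=f"a/{filename}",
        tofile=f"b/{filename}",
    )
    return "".join(diff)


def apply_if_approved(original: str, proposed: str, approved: bool) -> str:
    """Keep the original unless a human explicitly approved the change."""
    return proposed if approved else original


before = "def total(items):\n    return sum(items)\n"
after = "def total(items):\n    return sum(i.price for i in items)\n"

print(review_diff(before, after, filename="cart.py"))
result = apply_if_approved(before, after, approved=False)  # nothing changes without sign-off
```

Reading a two-line diff takes seconds. Re-reading a whole regenerated file does not, and that gap is the bottleneck this strategy is about.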

~ Final Thoughts

Karpathy’s YC AI Startup School talk is a compelling manifesto for the next decade of software. We are at an inflection point where:

- Software 3.0 empowers developers and non-developers alike through prompt programming.

- LLMs act as utilities, OSes, and creative collaborators, not just black-box APIs.

- Partial autonomy and agents are realistic, impactful avenues, not AGI pipe dreams.

As software developers and designers, our challenge is to close the demo-to-product gap, design for agent interaction, and create reliable interfaces between humans and intelligent systems.

The revolution isn’t coming. It’s already here - written in prompts, delivered via API, and guided by humans.

🔎 References: